For #SwymHackathon2023, we trained and built a chatbot that has learned from Swym help articles. I will share how easy it is for anyone to train a chatbot like this, which could significantly reduce the number of incoming support tickets.

Introduction

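llama-index, a Python package, gives us an easy way to connect LLMs with our own data. LLMs are pre-trained on large amounts of publicly available data, and with llama-index we can supplement them with our own private data: it indexes that data and hands the relevant passages to the LLM when a question is asked.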
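To make the idea concrete, here is a toy sketch of the retrieve-then-ask pattern using only the standard library. It is not how llama-index is implemented: the word-count "embedding", the sample passages, and the function names are all assumptions made for illustration, and a real setup uses learned embeddings from a model.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': a bag-of-words count vector (stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, passages, top_k=1):
    """Return the top_k passages most similar to the question."""
    q = embed(question)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, passages):
    """Combine the retrieved context and the question into one prompt for an LLM."""
    context = "\n".join(retrieve(question, passages))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

# Hypothetical help-article snippets, made up for this example.
passages = [
    "A wishlist lets shoppers save products they want to buy later.",
    "Shipping usually takes three to five business days.",
]
prompt = build_prompt("How do shoppers save products?", passages)
```

The prompt that reaches the LLM contains only the passage closest to the question, which is why adding more of your own articles tends to improve the answers.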

Step 1

Install the package

pip install llama-index

Step 2

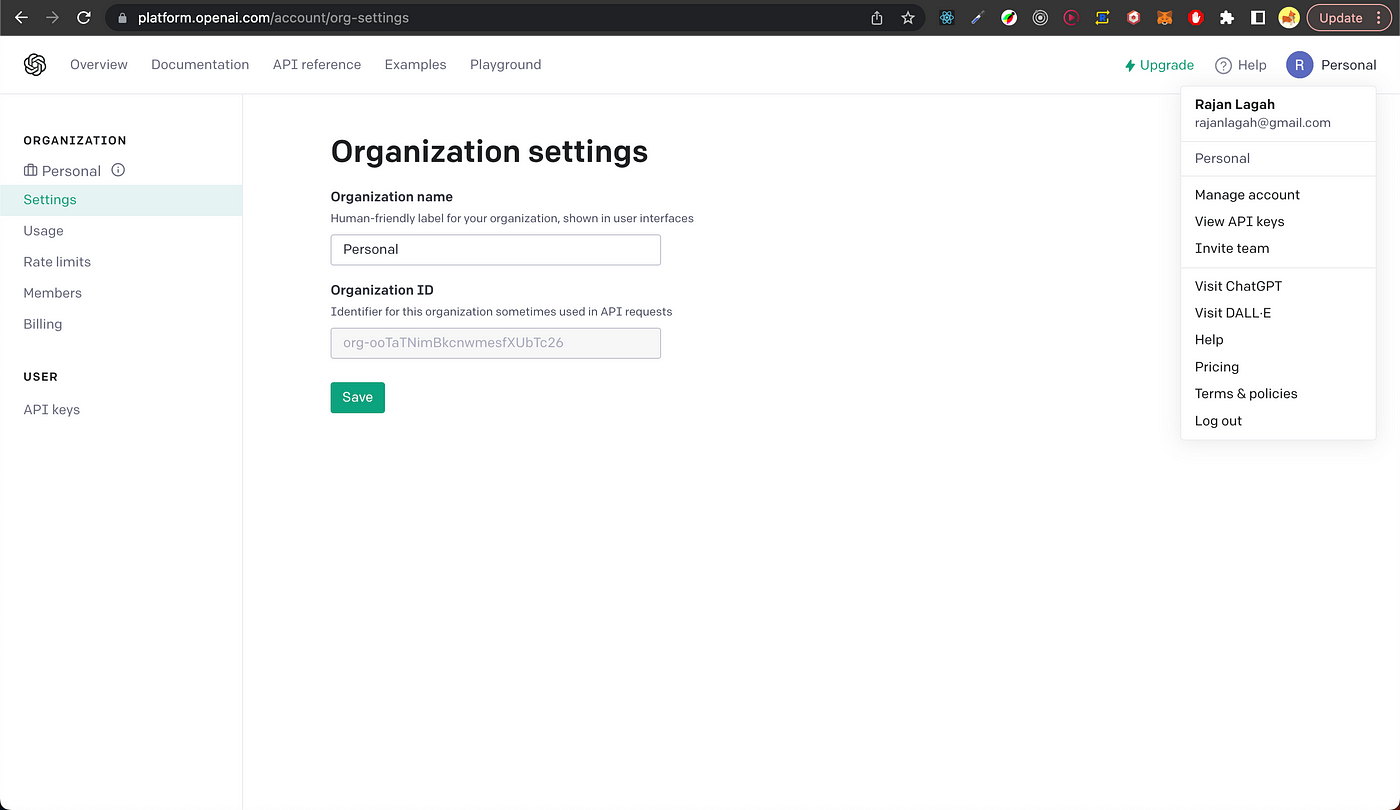

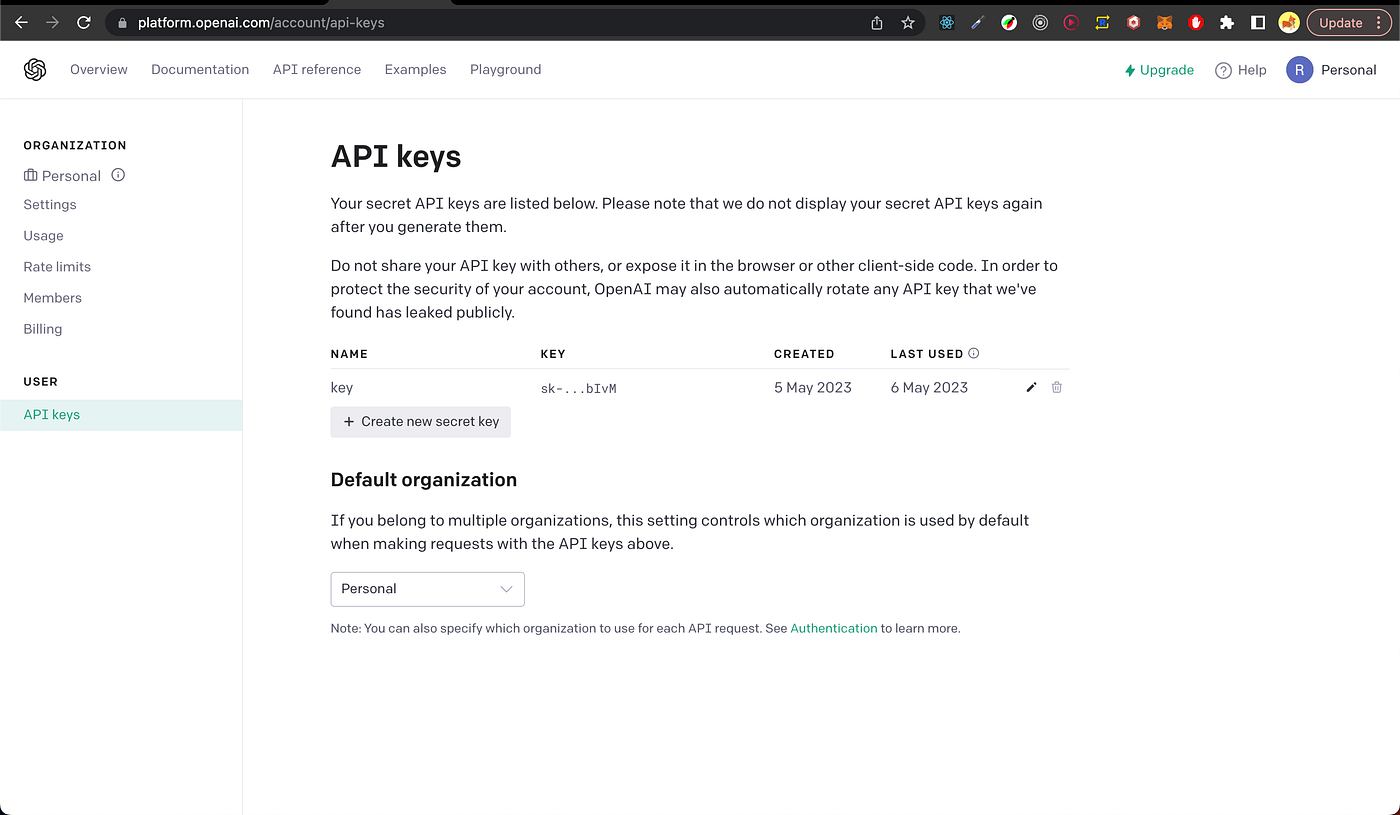

Go to https://platform.openai.com/ and sign up.

- Click on “View API keys”

- If you don't see any key, create one by clicking “Create new secret key”
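Since the secret key should not end up in shared code, one option is to export it in your shell and read it from the environment. The helper below is a minimal sketch; the name `require_api_key` is hypothetical and is not part of llama-index or the OpenAI library.

```python
import os

def require_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Return the API key from the environment, or fail early with a clear message."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before running the script")
    return key

# Usage (assumes you ran `export OPENAI_API_KEY=...` in your shell first):
# api_key = require_api_key()
```

Failing early like this gives a clearer error than waiting for the first API call to be rejected.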

Step 3

Coding

import os

os.environ['OPENAI_API_KEY'] = '<API_KEY>'

from llama_index import GPTVectorStoreIndex, SimpleDirectoryReader

from llama_index import download_loader

# download the SimpleWebPageReader loader class

SimpleWebPageReader = download_loader("SimpleWebPageReader")

# create the web page reader

loader = SimpleWebPageReader()

# load the documents from the URLs; the index is built from them further down

documents_swym = loader.load_data(urls=[

'https://swym.it/help/developer-documentation/',

'https://swym.it/help/enabling-wishlist-plus-through-your-shopify-site-menu/',

])

index_swym = GPTVectorStoreIndex.from_documents(documents_swym)

query_engine = index_swym.as_query_engine()

print(query_engine.query('What is swym wishlist?').response)

Swym Wishlist is a feature that allows customers to save items they are interested in purchasing, receive reminders about those items, and receive alerts when those items are back in stock or have a price drop. It also allows customers to sign up for marketing campaigns and track user activity on adding items to their wishlist.

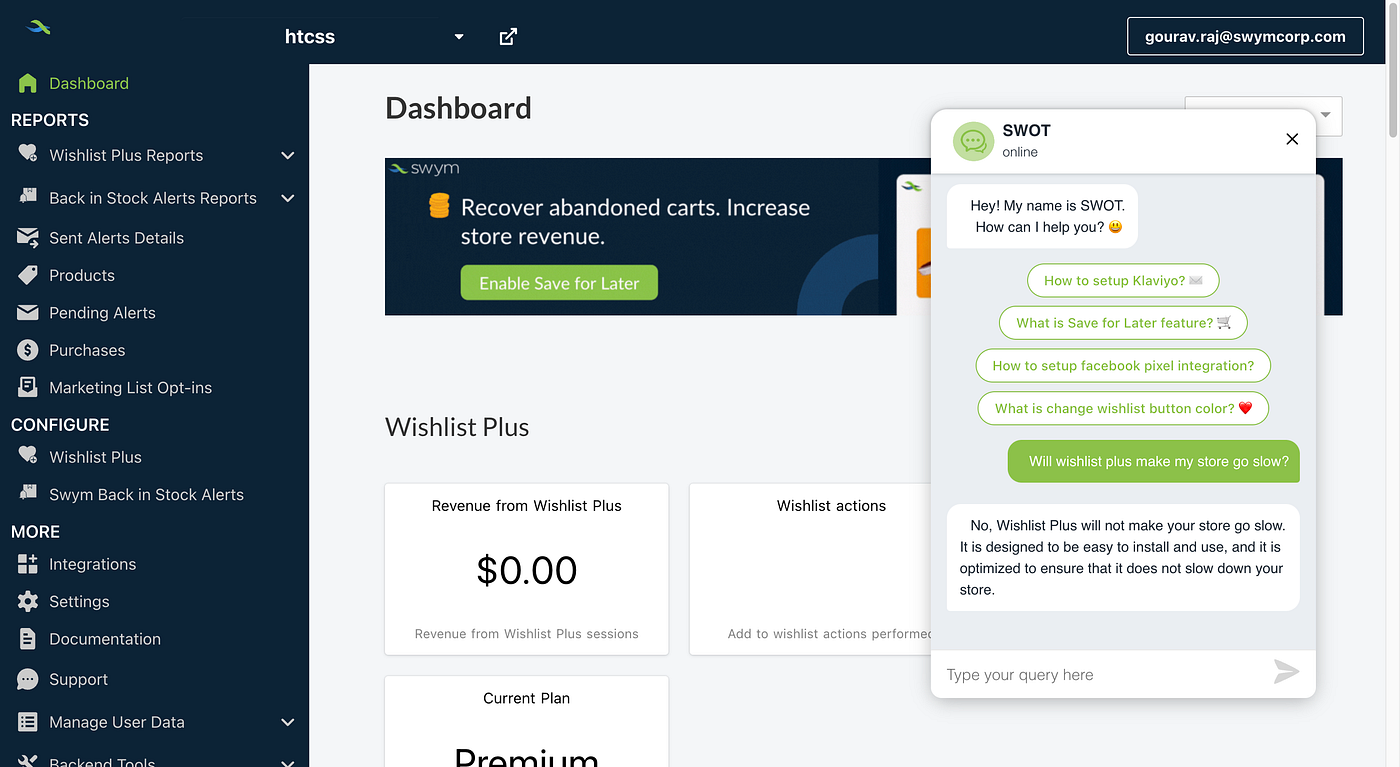

So our chatbot is ready. Just pass in more help-article URLs for better results.
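As the list of articles grows, it may be easier to keep the URLs in a text file than in the script. The sketch below cleans such a list; `clean_urls` and the file name `urls.txt` are hypothetical names chosen for this example.

```python
def clean_urls(lines):
    """Strip whitespace, skip blank lines and '#' comments, and drop duplicates in order."""
    urls = []
    seen = set()
    for line in lines:
        url = line.strip()
        if not url or url.startswith("#") or url in seen:
            continue
        seen.add(url)
        urls.append(url)
    return urls

# Usage (assumes a file named urls.txt with one URL per line):
# with open("urls.txt", encoding="utf-8") as f:
#     documents_swym = loader.load_data(urls=clean_urls(f))
```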

Wrap up comments

You can also build the index once and save it for future use.

To save the index:

index_swym.storage_context.persist(persist_dir='./Model')

This creates a new folder and saves the index data and its configuration there (the index itself, not the LLM's weights). Keep that folder, and when you need the index again you can load it using:

from llama_index import StorageContext, load_index_from_storage

storage_context = StorageContext.from_defaults(persist_dir='./Model')

index = load_index_from_storage(storage_context)
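Saving and loading can be combined so the index is only rebuilt when no saved copy exists. The helper below is a sketch under that assumption; `load_or_build` is a hypothetical name, and the two callables are where the llama-index calls from this article would go.

```python
import os

def load_or_build(persist_dir, load_fn, build_fn):
    """Load a saved index if persist_dir exists and is non-empty, otherwise build one."""
    if os.path.isdir(persist_dir) and os.listdir(persist_dir):
        return load_fn(persist_dir)
    return build_fn(persist_dir)

# Usage with the calls shown in this article (not executed here):
#
# def load_fn(persist_dir):
#     storage_context = StorageContext.from_defaults(persist_dir=persist_dir)
#     return load_index_from_storage(storage_context)
#
# def build_fn(persist_dir):
#     index = GPTVectorStoreIndex.from_documents(documents_swym)
#     index.storage_context.persist(persist_dir=persist_dir)
#     return index
#
# index = load_or_build('./Model', load_fn, build_fn)
```

Building the index calls the OpenAI API for every document, so reusing a saved copy avoids paying for the same work twice.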

You will also need a UI for others to interact with the chatbot.
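Before building a full web UI, a command-line loop can be enough to let teammates try the bot. This is only a sketch: `chat_loop` is a hypothetical helper, and `answer_fn` stands for whatever function turns a question into an answer.

```python
def chat_loop(answer_fn, read=input, write=print):
    """Read questions until an empty line or end of input, writing each answer."""
    write("Ask a question (empty line to quit).")
    while True:
        try:
            question = read("> ").strip()
        except EOFError:
            break
        if not question:
            break
        write(answer_fn(question))

# Usage with the query engine from this article (not executed here):
# chat_loop(lambda q: query_engine.query(q).response)
```

Passing `read` and `write` as parameters keeps the loop easy to test and easy to swap for a web or chat front end later.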

Thank you for reading and I hope you have learned something from this article.